‘Artificial intelligence has dangerous implications’: Warwick students debate

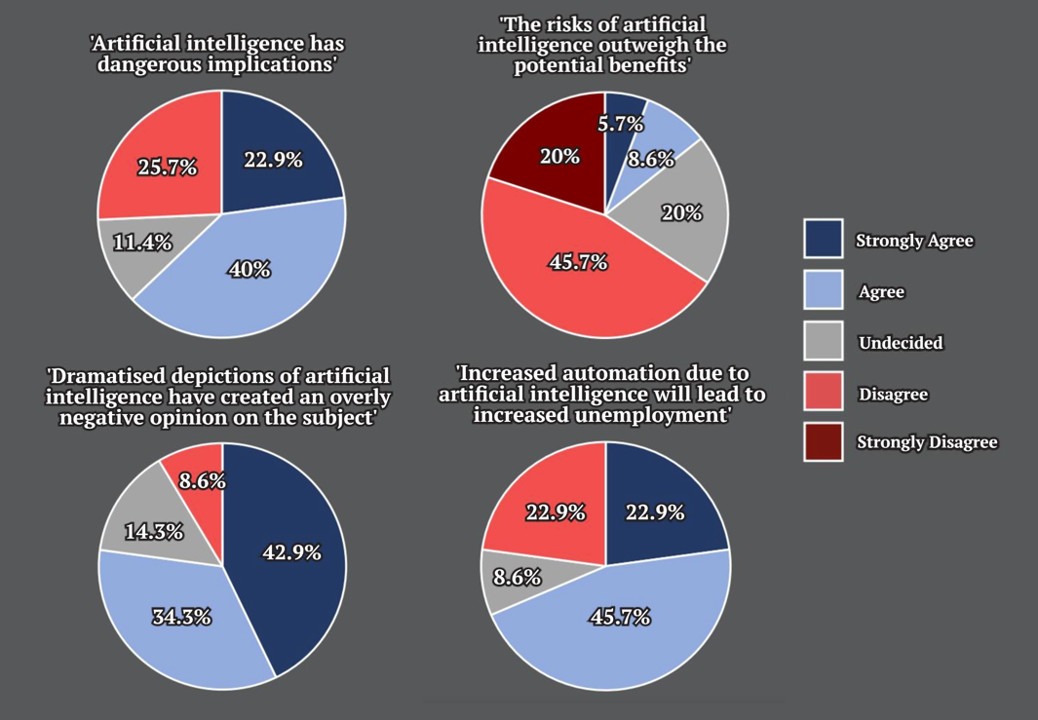

The development of artificial intelligence is a controversial topic with strong arguments on both sides. AI could lead to major technological advancements, but there are also fears that it could lead to unemployment, loss of privacy, and machines more intelligent than us. A survey of 35 Warwick students carried out by The Boar Science & Tech found that this divide in opinion is also present among the University of Warwick's student population, with views ranging from strong support for AI development to fear of its future.

With this in mind, Warwick students Henry Jia and Raphael Thurston debate whether artificial intelligence could usher humanity into an exciting future or amplify existing problems of inequality and privacy.

Henry Jia discusses the danger that AI could pose to society

The real dangers of artificial intelligence are often overlooked in favour of the Hollywood-esque apocalypses we are familiar with. One of the biggest threats posed by AI is the rise of surveillance states. The ability of computers to understand and interpret the visual world, known as 'computer vision', enables autonomous mass surveillance with very little manpower. Such systems are already being deployed in countries such as China.

Even in democratic states in the West, large marketing companies such as Amazon, Google, and Facebook/Meta wield AI amorally. Thanks to revelations such as the Cambridge Analytica scandal, we know that Facebook has no qualms about using data and AI without regard for the effect on our democracy and society. Developing effective AI systems requires enormous quantities of data and computation, and such resources are mostly available only to these tech titans. Given their questionable track records, we must ask how these corporations will use increasingly advanced machine learning methods.

AI also poses a danger to society when it is applied without understanding. One example is the use of AI to predict crime, an idea that various police departments in both the US and the UK have raised. Such schemes would be undemocratic at best, violating the principle of innocent until proven guilty, and downright discriminatory at worst. It is well known among researchers that most AI algorithms are 'lazy': they will latch onto the simplest pattern that fits their training data. Many ethnically diverse areas are underfunded and underdeveloped, and consequently have higher crime rates. Someone who naively applies AI to policing would therefore inevitably target these minorities, because a system trained on such data would learn, and then reinforce, the established biases against them.

Furthermore, most of the data used to train AI systems is gathered in the West and reflects our existing social biases. For example, computer vision systems are more likely to classify white men as doctors and white women as nurses, because most of the images they are trained on reflect this pattern. People who are unfamiliar with the field of machine learning (ML) and AI might assume that AI is objective and unbiased, but this is far from the truth. AI is in fact more likely to amplify these prejudices than to correct them, and the question of how to create socially unbiased artificial intelligence remains a difficult and active area of research.

Lastly, there is the issue of automation. Artificial intelligence won't create mass unemployment, as many scaremongers suggest. Just because computer vision systems can detect certain types of cancer as well as doctors can, this does not mean they will replace doctors. Instead, doctors who are familiar with computer vision and AI will replace those who are not. AI will both create and destroy jobs, just as the car replaced the horse and created new jobs for mechanics. Nonetheless, the potential for social change and upheaval is far from small. Artificial intelligence has the possibility of massively increasing inequality, handing great wealth to the corporations and individuals who control such technology.

At the end of the day, AI is a brilliant technology, but it is a technology unlike any that has come before it. It will certainly not usher in a Terminator-style apocalypse, but it could cause unprecedented social upheaval, and this article is by no means an exhaustive list of the ways AI can be misused. Much of the pain it could inflict is also likely to fall on those who are already marginalised.

Raphael Thurston looks to the exciting future of artificial intelligence

Far from being dangerous, the implications of AI are immensely positive, both in the short term, through what is best characterised as 'Artificial Narrow Intelligence' (ANI), which specialises in a single task, and in the longer term, through 'Artificial General Intelligence' (AGI), which will rapidly surpass human capabilities in practically every domain.

I vividly remember discovering, just under six months ago, the magical capabilities of DALL-E 2, OpenAI's AI art model. I enthusiastically added myself to the waiting list, and over the summer I tried it out. The results, albeit occasionally bizarre, were regularly magnificent. It is now publicly accessible, and I recommend giving it a go. I now view DALL-E 2 as one of the first mainstream examples of how AI can elevate our creative and intellectual aspirations.

In discussions of the threat that automation poses to the world of work, AI is often depicted as a danger. To the dismay of many economists, Universal Basic Income (UBI) is becoming an increasingly attractive prospect as humans are displaced from the workforce. I believe it is only a matter of time before widespread forms of UBI are implemented, with figures such as 2020 US presidential hopeful Andrew Yang raising public awareness. An argument frequently posited by sceptics of UBI is that humans can adapt to whatever economy they find themselves in, with the transformations following the Industrial Revolution serving as an exemplary case of our adaptability. This belief is reasonable within the confines of an economy in which humans remain intellectually superior. However, times are changing: in an ever-growing list of tasks, ANI is leagues above what any human could ever accomplish, which disincentivises the employment of humans in many sectors.

This does not make AI dangerous, though, since labour should not be our primary source of fulfilment. AI, and with it UBI, could give us the time and financial freedom to devote ourselves to our true interests. If you think you'd be bored without a job, your data could easily be analysed to suggest passions you would never even have considered.

Looking further ahead to the advent of AGI, we must recognise that AI is not a danger, but our greatest opportunity to fulfil our potential both as individuals and as a species. Understandably, there is concern over whether AI can be aligned with our aims, a challenge that computer scientist Stuart Russell calls the 'control problem'. He proposes that we must build into AI the recognition that it does not necessarily know the true objective humans intend, because of our limited knowledge and ability to communicate it, even though that true objective is what it must pursue. In short, it must see inherent value in interpreting us cautiously.

The development of AGI is likely to be our best chance of solving the many issues of the day, as well as those that have plagued us for millennia. Disease, famine, climate change, nuclear hostility, and mortality itself are among the problems that AGI (which would rapidly become 'Artificial Superintelligence', or ASI) could theoretically engineer solutions to, thanks to its vastly superior intellect and its ability to avoid the infighting that hampers our decision-making. DeepMind, one of the world's leading AI research laboratories, is in what I believe to be the capable and well-meaning hands of Demis Hassabis, and in a race where every second counts, this ought to serve as a reassurance to us all.

Comments