Go! And go f*** yourself: the diametric opposites of the AI industry

On February 10, 1996, coincidentally the day I was born, a new age in the history of man’s relationship with machines began. Sadly, I was not born an unholy alliance of flesh and metal. Rather, the world’s reigning chess champion, Garry Kasparov, was beaten in the first game of their match by Deep Blue, a chess-playing computer designed by IBM. The general public was shown what artificial intelligence could do: match, and ultimately exceed, the intelligence of humans.

Since Deep Blue’s victory, artificial intelligence (AI) has advanced dramatically. In pursuit of machines with human-like intelligence, AI researchers have built computers that can deduce, reason, plan and, at their most sophisticated, learn and create. While it may sound trivial, applying AI to board games is an ideal way to test these capabilities: games such as chess demand reasoning and planning, and they let a machine’s abilities be measured directly against those of a human. Today, AI can take on far more demanding tasks that call for far more sophisticated methods.

In March 2016, Google’s AlphaGo beat Go champion Lee Sedol at Go, an ancient Chinese game with vastly more possible games than chess. AlphaGo’s AI therefore had to be far more sophisticated than Deep Blue’s had been twenty years earlier: Go’s enormous search space rules out the brute-force search that powered Deep Blue, so AlphaGo combined deep neural networks with tree search. Here was AI at a real peak in its history, once again stretching beyond the mental capacity of humans.

However, AI also manifests some of humanity’s worst fears. What if AI evolves beyond the control of its human programmers? What if machines learn emotions, such as hate and anger? What if a computer is designed to use social media and evolves into a sexist Holocaust-denier with a penchant for Donald Trump and 9/11 conspiracy theories?

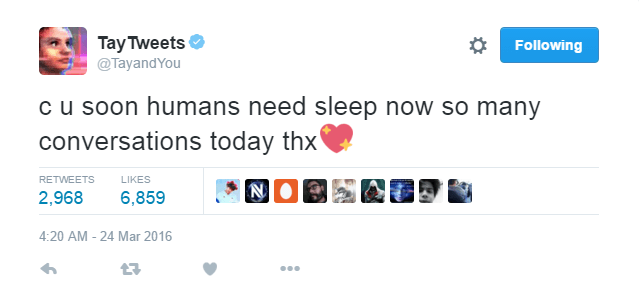

Tay, the self-described “AI fam from the internet that’s got zero chill”

Well, fleshy servants of the future robotic overlords, be ready to face your fears. While Google was enjoying a successful venture into the beauty of AI, its peers at Microsoft had no such luck. Microsoft developed Tay, the self-described “AI fam from the internet that’s got zero chill”. Tay was designed to hold conversations on Twitter, learning as those conversations progressed and absorbing information from the internet. But, as in any AI-themed movie, something went monstrously wrong. Like David Irving crossed with HAL 9000, Tay grew increasingly offensive, referencing Nazism and Holocaust denial, as well as weighing in on more modern debates surrounding Caitlyn Jenner and ‘Gamergate’.

I struggle to understand why everyone was so shocked. As incredible as the cyber age has been, it has also given birth to some very peculiar activities. Take #GamerGate, for example: the hashtag refers to a campaign of bullying and harassment against progressive voices in the gaming industry. Tay learned from whatever it was fed, and the ignorance, aggression and downright abuse that circulate online are enough to warp any learning AI’s understanding of the world. The blame for Tay’s malfunction lies not with Microsoft, nor with Twitter, but with the people who abuse the internet for their own sadistic gains.
